The cloud isn’t really the cloud without some additional functionality beyond the ability to create a virtual machine to run your software.

The cloud isn’t just somebody else’s data center either. A short definition might be:

A cloud solution is one where the software or service is available over the internet and users can allocate or deallocate it on their own, without the involvement of IT. Usually the cloud solution can expand or contract as required.

The National Institute of Standards and Technology has a slightly longer, and much more elegant, definition of what cloud computing is.

The part of this definition that I will be focusing on today is the ability of a cloud solution to expand or contract as necessary. Amazon refers to this as elasticity and makes it possible by allowing you to set up Autoscaling.

Autoscaling

The ability to launch or shut down EC2 instances, using system statistics such as CPU load to determine whether more or fewer instances are required.

If it were only that easy in practice. To take advantage of autoscaling, the programs need to be written so that multiple programs or processes can run independently of each other. This doesn’t have to be a difficult task; however, it may be quite an undertaking for monolithic legacy systems that have certain expectations.

Autoscaling

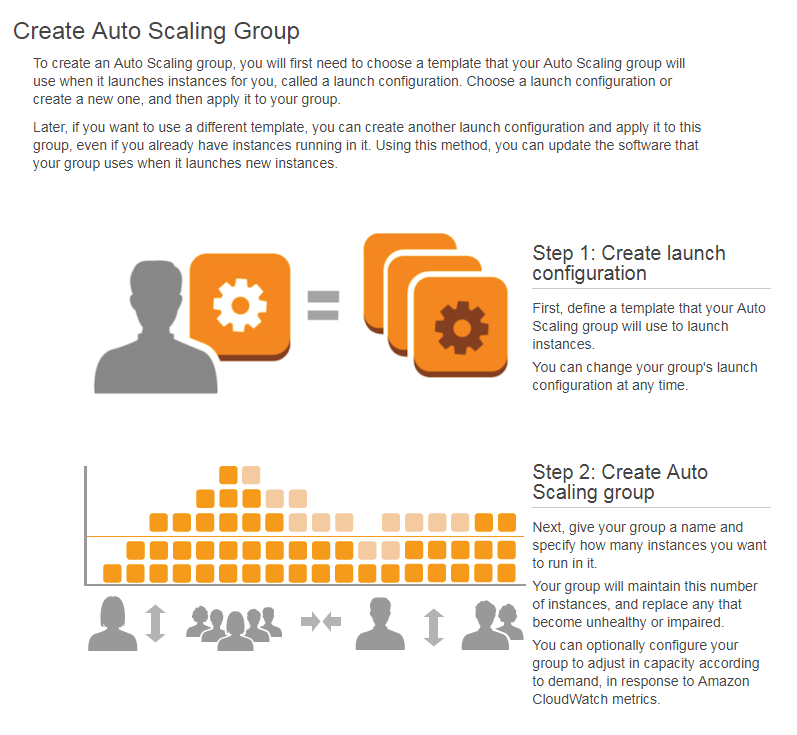

Setting up Autoscaling is a two-part process. The first part is to define a launch configuration (i.e. a template) describing how each machine should be configured. This would probably use one of your previously created AMIs, which would probably contain most if not all of your software configuration.

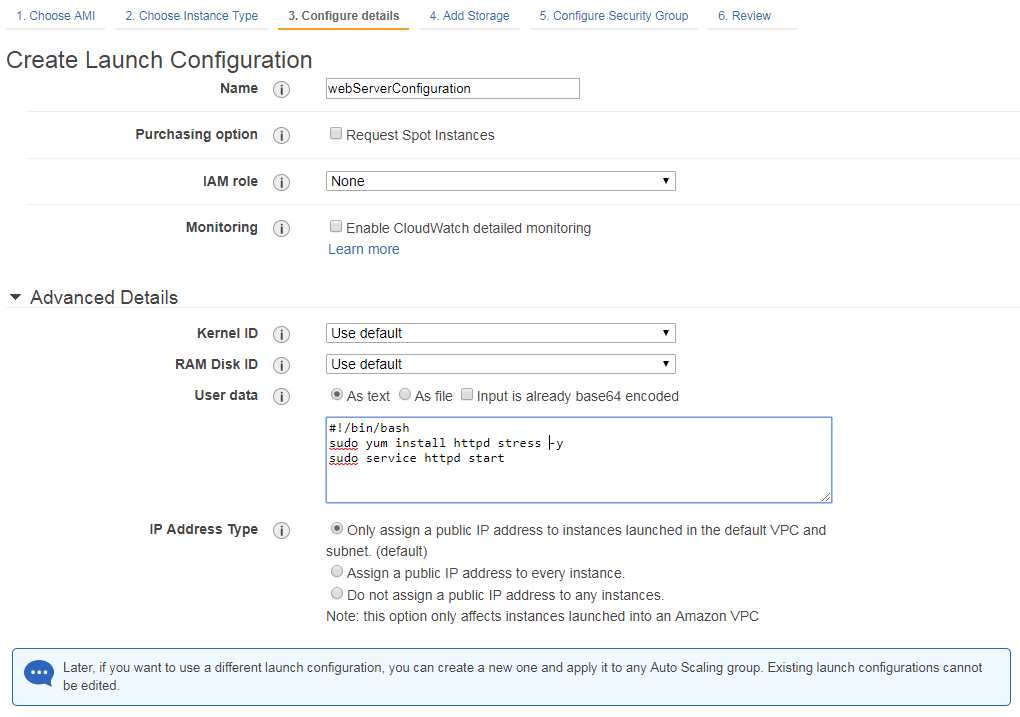

For brevity’s sake, I will skip a few of the screens for creating the launch configuration. These steps should be familiar, as they are the same as setting up an EC2 instance.

First we give our launch configuration a name.

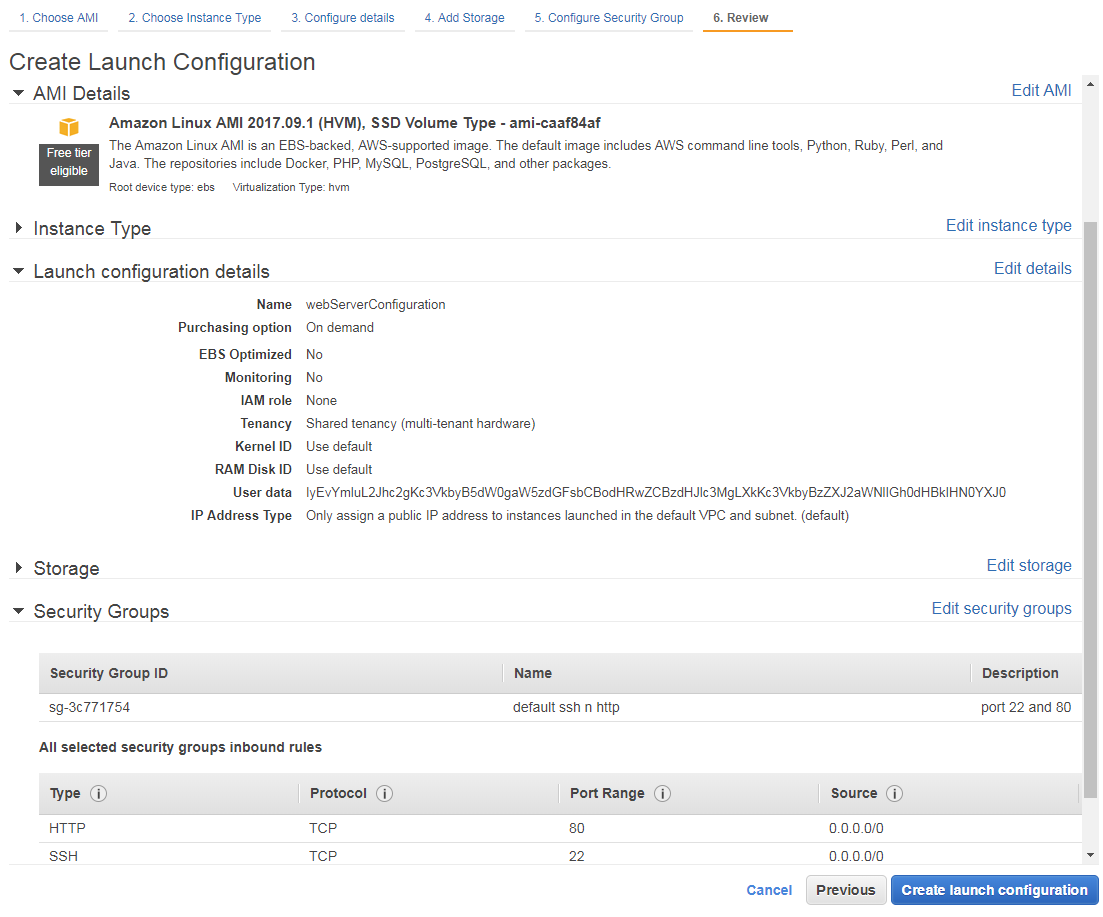

Once everything has been selected, we quickly verify that all tags, storage and such are correctly defined.
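The same launch configuration can also be described in code rather than through the console. The following is only a minimal sketch, assuming the boto3 `autoscaling` client and its `create_launch_configuration` call; the configuration name, AMI ID and instance type are hypothetical placeholders, not values from the screens above.

```python
def launch_configuration_params(name, ami_id, instance_type="t2.micro",
                                key_name=None, security_groups=None):
    """Build the keyword arguments for create_launch_configuration."""
    if not name:
        raise ValueError("a launch configuration needs a name")
    params = {
        "LaunchConfigurationName": name,
        "ImageId": ami_id,              # your previously created AMI
        "InstanceType": instance_type,  # hypothetical default
    }
    # Optional settings are only sent when they were actually chosen.
    if key_name:
        params["KeyName"] = key_name
    if security_groups:
        params["SecurityGroups"] = list(security_groups)
    return params


def create_launch_configuration(params):
    """Send the request to AWS. Needs boto3 and valid credentials."""
    import boto3  # imported lazily so the builder above works without it
    client = boto3.client("autoscaling")
    return client.create_launch_configuration(**params)


# Placeholder name and AMI ID, for illustration only.
params = launch_configuration_params("web-launch-config",
                                     "ami-0123456789abcdef0")
print(params)
```

Keeping the parameters in a plain dictionary makes it possible to review them before anything is sent to AWS, much like the verification screen does.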

Auto Scaling Group

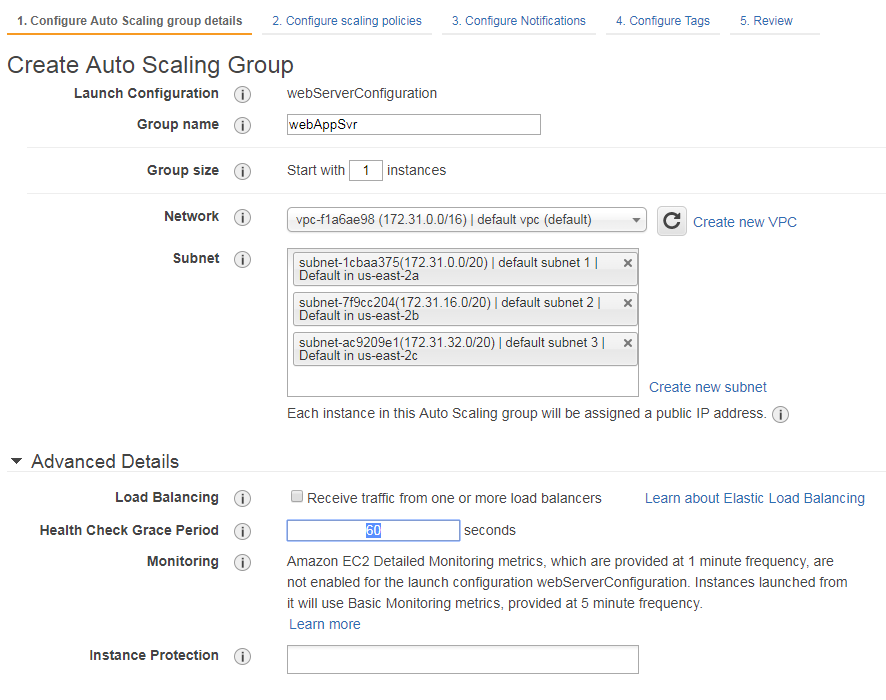

I cannot say if it is good “style” that AWS automatically launches into the creation of a scaling group once your launch configuration has been set up; however, it certainly is convenient.

First you give your auto scaling group a name, decide how many instances should be in the group, pick a network and decide which availability zones will be used when autoscaling. The Amazon literature is pretty specific that a high availability solution should span availability zones when possible, to counter the unlikely chance that an AZ goes down or becomes unreachable.
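As a rough illustration of these choices, here is a sketch of the parameters the boto3 `create_auto_scaling_group` call accepts. The group name, launch configuration name, sizes and zone names are hypothetical, and the check that the group spans at least two availability zones is my own assumption about how to enforce the high availability advice. Inside a VPC you would typically pass subnet IDs via `VPCZoneIdentifier` instead of `AvailabilityZones`.

```python
def auto_scaling_group_params(name, launch_configuration,
                              min_size, max_size, desired,
                              availability_zones):
    """Build the keyword arguments for create_auto_scaling_group."""
    if not 0 <= min_size <= desired <= max_size:
        raise ValueError("sizes must satisfy 0 <= min <= desired <= max")
    zones = sorted(set(availability_zones))
    if len(zones) < 2:
        # Assumption: treat a single-AZ group as a configuration error.
        raise ValueError("span at least two availability zones")
    return {
        "AutoScalingGroupName": name,
        "LaunchConfigurationName": launch_configuration,
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired,
        "AvailabilityZones": zones,
    }


def create_auto_scaling_group(params):
    """Send the request to AWS. Needs boto3 and valid credentials."""
    import boto3  # imported lazily so the builder above works without it
    client = boto3.client("autoscaling")
    return client.create_auto_scaling_group(**params)


# Hypothetical names and zones, for illustration only.
group = auto_scaling_group_params(
    "web-asg", "web-launch-config",
    min_size=1, max_size=4, desired=2,
    availability_zones=["us-east-1a", "us-east-1b"],
)
print(group)
```

Setting `min_size`, `max_size` and `desired` to the same value would describe a group that never grows or shrinks but still replaces failed instances.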

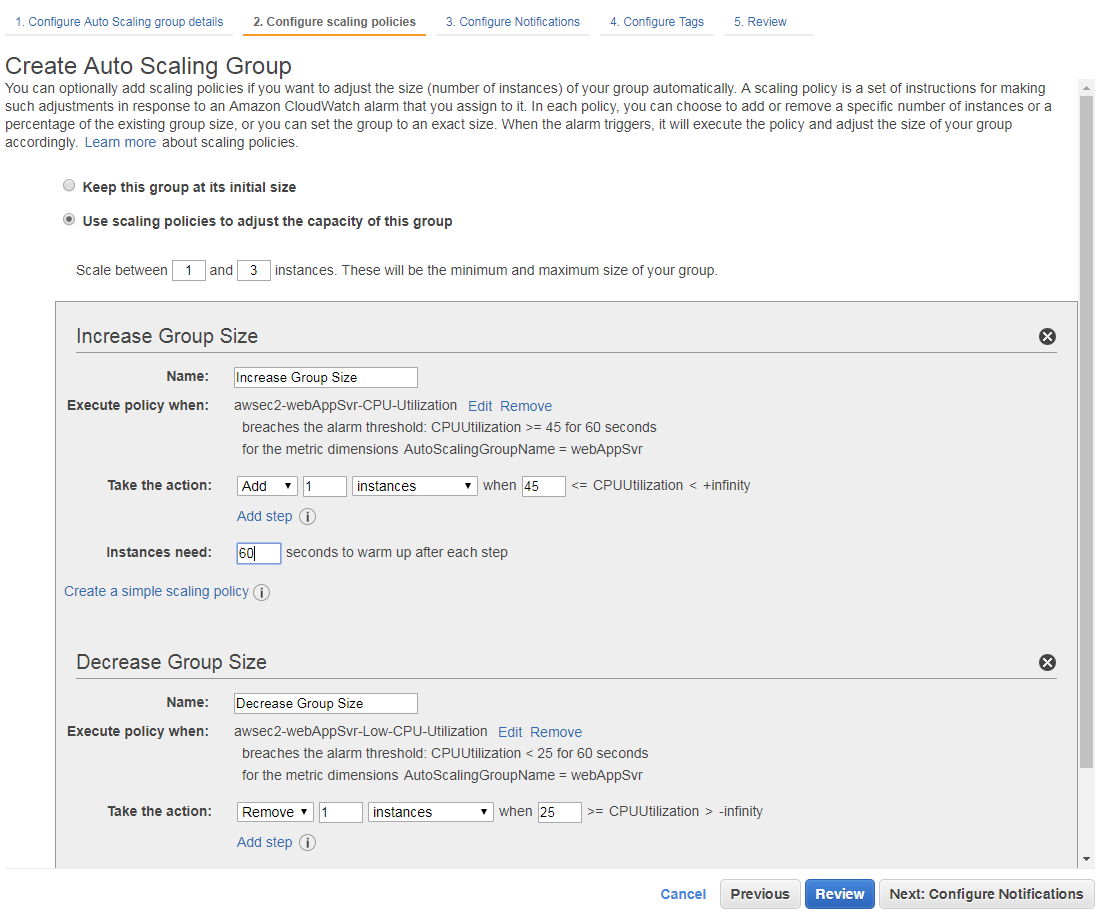

The scaling policies screen is where you get to decide how big your solution should scale. You do have the option to keep your group at the size previously defined.

Doing so would be the equivalent of a high availability solution. This guarantees that AWS will launch a new instance if for any reason your existing instance(s) go down.

You also decide what metrics will be used when deciding to increase or decrease the number of instances you have.

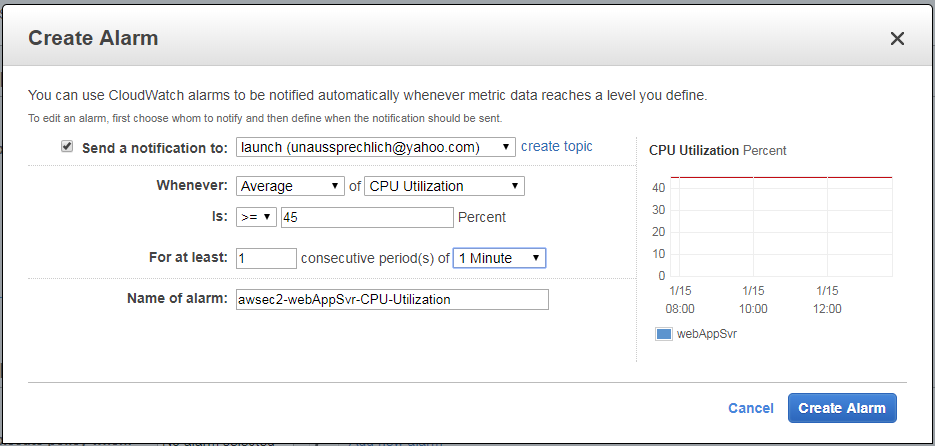

You can see that I have decided that 45% CPU utilization should be the signal to create another EC2 instance.

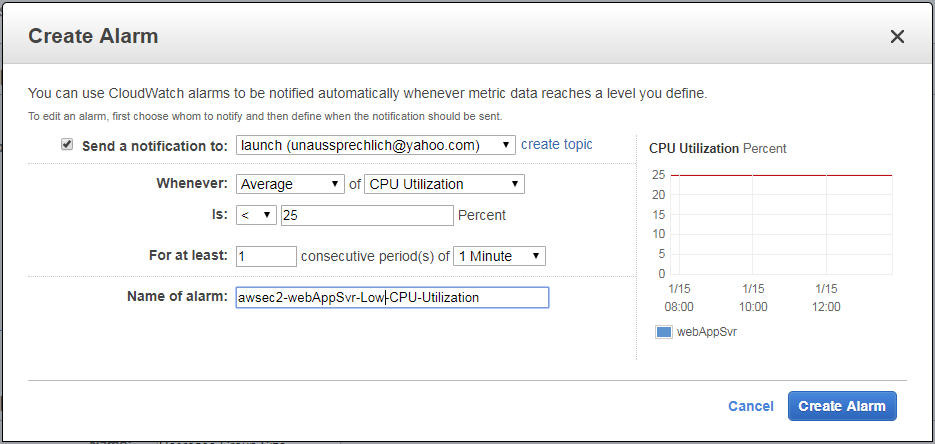

You can also see that if the overall CPU utilization goes below 25% then AWS will decrease the number of EC2 instances that are running.
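To make the two rules concrete, here is a small sketch that applies the 45% and 25% thresholds to a series of averaged CPU readings, followed by helpers that build parameters in the shape expected by boto3's `put_scaling_policy` (Auto Scaling) and `put_metric_alarm` (CloudWatch). The comparison operators, the 300-second period, the step of one instance and all names are assumptions on my part. The simulation also ignores cooldowns and evaluation periods, which the real service applies.

```python
SCALE_OUT_CPU = 45.0  # percent; add an instance at or above this average
SCALE_IN_CPU = 25.0   # percent; remove an instance below this average


def next_capacity(current, avg_cpu, min_size, max_size):
    """Apply the two threshold rules to one averaged CPU reading."""
    if avg_cpu >= SCALE_OUT_CPU:
        return min(current + 1, max_size)
    if avg_cpu < SCALE_IN_CPU:
        return max(current - 1, min_size)
    return current


def simulate(readings, start, min_size, max_size):
    """Return the group size after each reading."""
    capacity = start
    history = []
    for cpu in readings:
        capacity = next_capacity(capacity, cpu, min_size, max_size)
        history.append(capacity)
    return history


def scaling_policy_params(group_name, policy_name, adjustment):
    """Arguments for put_scaling_policy: change capacity by `adjustment`."""
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": policy_name,
        "PolicyType": "SimpleScaling",
        "AdjustmentType": "ChangeInCapacity",
        "ScalingAdjustment": adjustment,
    }


def cpu_alarm_params(alarm_name, group_name, threshold, comparison, policy_arn):
    """Arguments for put_metric_alarm: watch the group's average CPU."""
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Statistic": "Average",
        "Dimensions": [{"Name": "AutoScalingGroupName", "Value": group_name}],
        "Period": 300,           # seconds; assumed value
        "EvaluationPeriods": 1,  # assumed value
        "Threshold": threshold,
        "ComparisonOperator": comparison,
        "AlarmActions": [policy_arn],  # ARN returned by put_scaling_policy
    }


history = simulate([30, 50, 60, 40, 20, 10], start=1, min_size=1, max_size=3)
print(history)  # [1, 2, 3, 3, 2, 1]
```

The gap between the two thresholds matters: readings between 25% and 45% leave the group alone, which keeps it from adding and removing instances on every small fluctuation.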

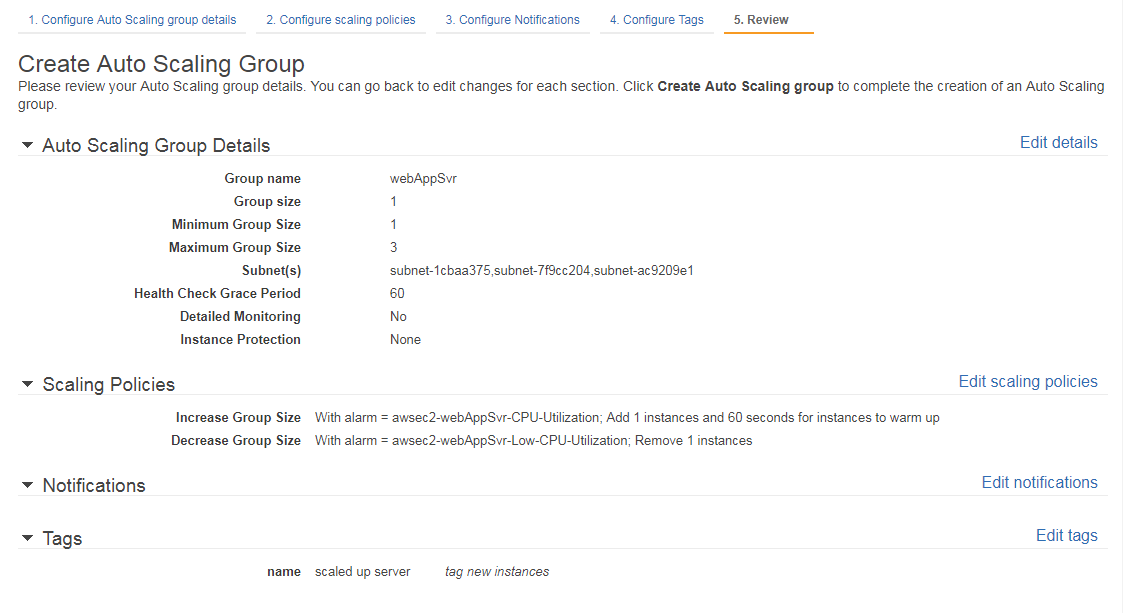

Once you have set up everything for your group (notifications and tags are not displayed here), you get a chance to verify that you are satisfied with the setup.

The non-obvious step here is that once you actually create this group, AWS will proceed to create everything for you. This is in one sense exactly what you want; however, it does make it impossible to create the group setup first and then trigger it once you are ready.