I guess it was a few years ago that I became more aware that those helpful computers were a bit smarter than they first seemed. Perhaps "smarter" isn't the right term; perhaps "intrusive" is. The exact incident was when I was sending off an email and had forgotten the attachment. The email service I was using had analyzed the text and pointed out that I had not attached the file I mentioned. It was nice to have the oversight caught, but it was also a bit disconcerting: what other keywords or phrases would my email provider be looking for? Would a question about insurance bring on an avalanche of insurance spam? What about topics of a more personal nature? Would I start to get mails about midwives and baby supplies? Perhaps mails about switching gynecologists?

So far I have actually been relieved not to see anything like that, at least not based on my emails. My browsing history is another matter. It is difficult to have no browsing history at all, but I have tried to stay logged out of accounts and keep as few cookies as possible. Well, imagine my surprise when I logged into a seldom-used email account and saw this mail.

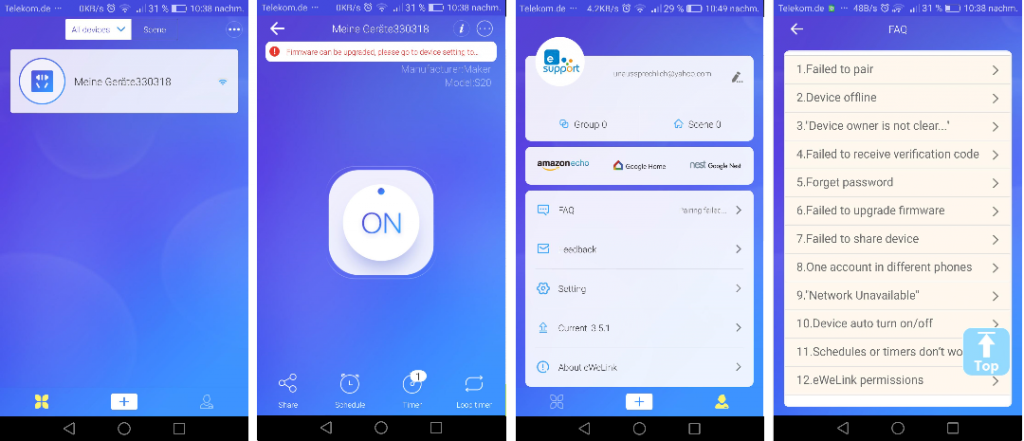

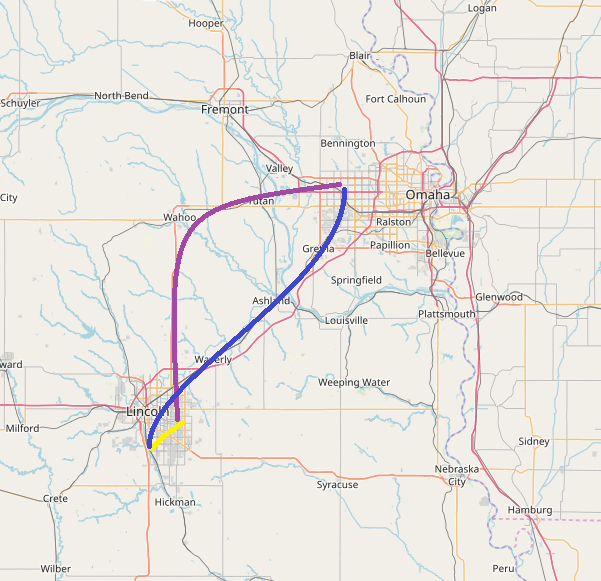

Not really remembering what a Google Maps Timeline was, I took a look, and it was a stroll down memory lane. This thing had been on for some time, and although it doesn't have all of my movements, in a lot of instances I can see which countries, cities, addresses or businesses I have visited. If you zoom in on a specific day, you can see what time you started your journey and from which location. The addresses are amazingly specific and correct, along with how long you spent at or near a location and how long it took to get to the next one. If this information were available to a criminal, it would be invaluable.

You can probably guess that your "victim" works at a certain location, since they seem to spend about 8 hours a day there between 8 am and 6 pm on weekdays. You can see whether that work location is close by or far away. If you have somehow obtained this information, you may already know that this victim is worth robbing, but the timeline might also confirm it. Do they eat out a lot? Do they visit expensive stores or shops? What is the best time of day, or day of the month, to "visit" their home? If you were a divorce lawyer, this might also look pretty interesting. Your client's husband claims to be working late or out of town on business, when he has actually been tracked to a specific address across town during that same time frame.

This information might also be useful for corporate espionage: where did the head of global acquisitions go? We know that she deals primarily with the automotive industry.

You might be thinking that if I am dumb enough to leave my GPS on and enable this feature from Google, I deserve all the tracking I get. That may be fair, especially the part about enabling the feature, but it turns out that I rarely have GPS enabled. All of this information appears to have been gathered using either Wi-Fi or the various cell towers of the mobile network.

These marked-up map examples were made using openstreetmap.org; the actual Google maps are much scarier. The Google map has wonderful detail, listing ATMs, coffee shops and barbershops, and even linking to the various establishments. It is possible to get the address, telephone number, opening hours and more.

I really like the openstreetmap.org site and the information it provides, but it does pale in comparison to Google Maps.

If this is the kind of tracking that can be done with just some passive cooperation, I now understand how large government investigations are able to produce a detailed list of the "criminal" and all of his or her associates, as well as their detailed movements prior to the crime.

Getting clear of tracking

Well, there is probably no way to be truly free of tracking by your cell provider, the phone OS provider or the apps themselves, but it is possible to turn off a few of the most direct tracking settings.

- Turn off location collection on your email account.

- Stop collecting GPS and Wi-Fi location information

- Remove old location history data

1. Gmail

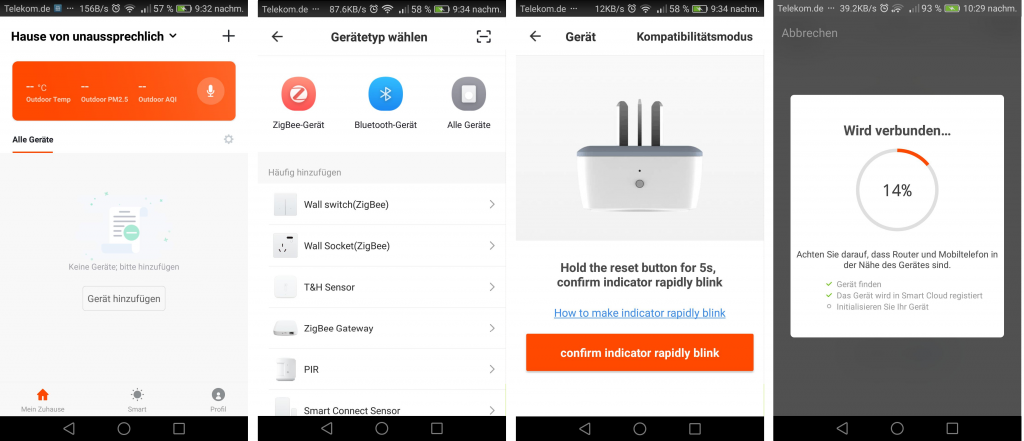

Log into your email account and go to the settings.

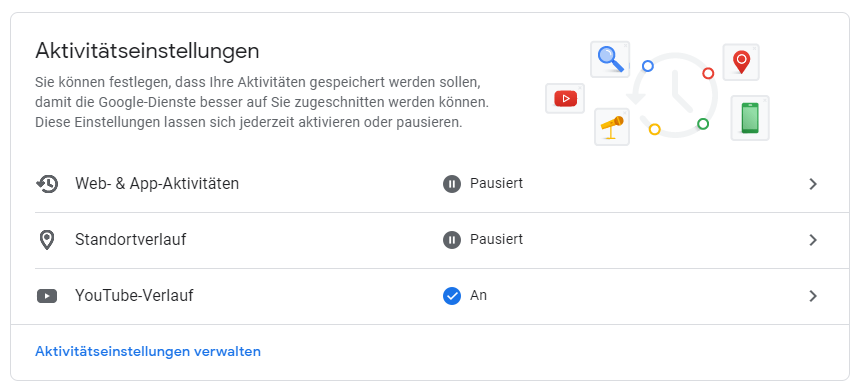

Change Location History ("Standortverlauf" in the German interface) from On to Off.

This should stop that particular email account from wanting to track you.

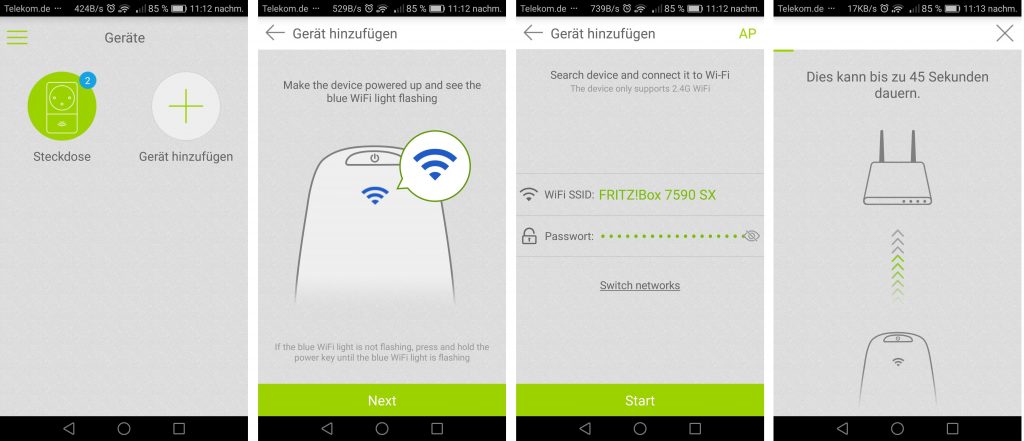

2. Turn off tracking on your phone

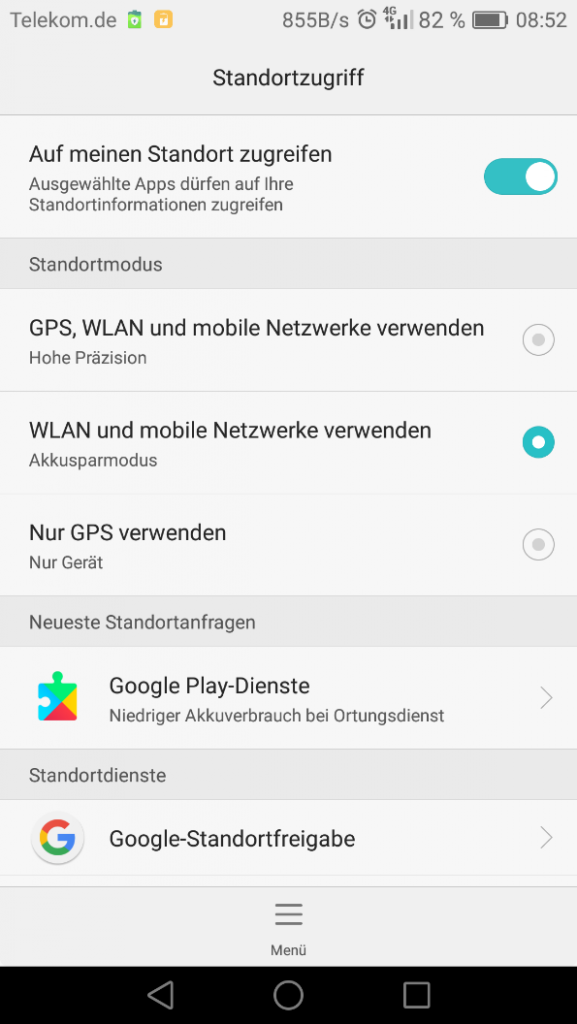

Turn off location access on your smartphone ("Access my location", shown as "Auf meinen Standort zugreifen" in the German interface). This has the advantage of not tracking you and may also extend your battery life.

3. Delete the history

I was quite surprised by this one. I went through my email account settings trying to delete this information, only to find that this does not seem to be possible there. You can delete the history, but you need to do it on your cell phone. However, once you delete the history there, your timeline as seen from your web browser no longer has any information on your previous travels.

Note: openstreetmap.org simply provides the maps; it doesn't track you, and the examples above only show something similar to a Google map. Why not use a Google map? Well, there are restrictions on displaying Google maps, and I also do not wish to share my location data.