Although Docker has quite a few command options, a small subset is all you need for general usage. The individual tasks you need are limited to the following:

- download an image

- run the image

- remove the image

- build a new image

- monitor images and containers
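Each task in this list maps onto a single docker subcommand. As a rough sketch, the hypothetical wrapper function `dtask` below (my own name, not part of Docker) shows which subcommand does what; the task names are taken from the list.

```shell
# Hypothetical wrapper (not part of Docker): maps each everyday task
# onto the docker subcommand that performs it.
#   dtask download <image>      fetch an image from the registry
#   dtask run <image>           start a container from an image
#   dtask remove <image>        delete a downloaded image
#   dtask build <name> [dir]    build an image from the Dockerfile in dir
#   dtask monitor               list images, then running containers
dtask() {
  case "$1" in
    download) docker pull "$2" ;;
    run)      docker run "$2" ;;
    remove)   docker rmi "$2" ;;
    build)    docker build -t "$2" "${3:-.}" ;;
    monitor)  docker images && docker ps ;;
    *)        echo "unknown task: $1" >&2; return 1 ;;
  esac
}
```

Note that `docker rmi` refuses to delete an image that is still referenced by a container, even a stopped one, so stopped containers usually have to be removed first.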

Docker has a store that contains both its official containers and community-supplied containers. The official containers include many large, well-known software packages:

- Microsoft SQL Server

- Oracle database

- Oracle Java 8

- WordPress

- Tomcat

- ownCloud

- gcc

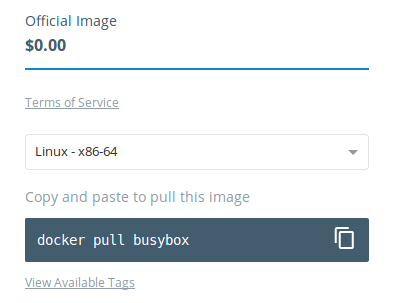

The store is important because it lists the containers that are available and their names. However, there is one more really important thing in the store: each listed container shows not only the name of the image to download but, more importantly, some helpful notes.

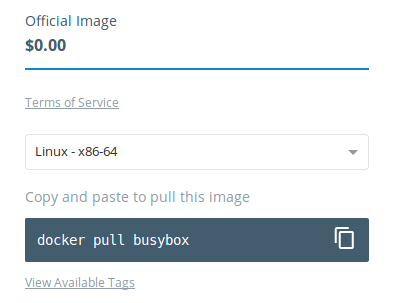

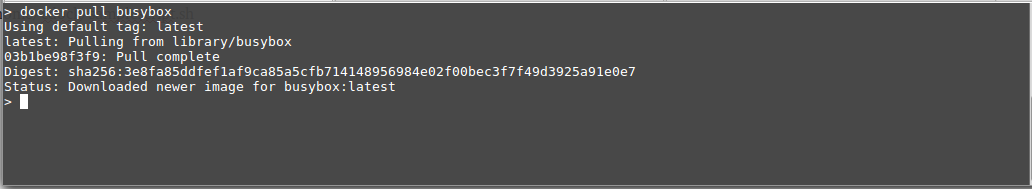

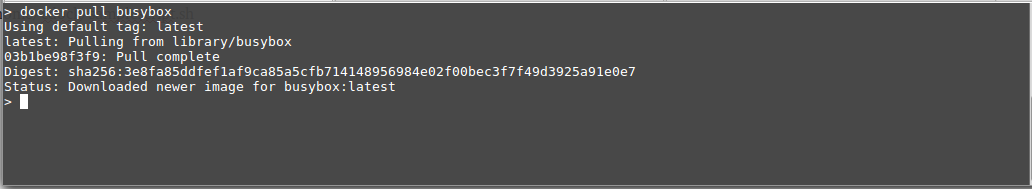

Each container shows the command for pulling the image down to your machine. This is important, but other details are listed as well that might be helpful when actually running the container.

These might include how to run the image, how to extend the existing container to make a new one, a description, or the license for the software.

The good news is that all of the entries in the store show the command for retrieving that specific image.

The only inconvenience is that you need elevated privileges to run docker commands. Getting them is as easy as using sudo: easy, but still somewhat inconvenient. Yet this too can be overcome with the tiniest of changes. Some time ago Docker introduced a docker group: the daemon listens on a Unix socket (/var/run/docker.sock) that is owned by root but is readable and writable by members of the docker group.

The solution, then, is simply to add your user to the docker group. This can be done when the user is created or, if the user already exists, with the usermod command. This is explained in a really good post on the How-To Geek website.
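A minimal sketch of the usermod step, wrapped in a hypothetical helper named `add_to_docker_group` (my name, not a Docker tool). It assumes the docker group already exists, which the usual Docker packages take care of; if it does not, `sudo groupadd docker` creates it. Keep in mind that Docker's own documentation points out that membership in this group is effectively equivalent to root access.

```shell
# Hypothetical helper: add a user (default: the current one) to the docker group.
# -aG *appends* the group; -G without -a would replace the user's
# other supplementary groups.
add_to_docker_group() {
  local user="${1:-$(id -un)}"
  sudo usermod -aG docker "$user"
}
```

After running it, log out and back in (or start a shell with `newgrp docker`) so the new membership takes effect; `id -nG` should then list docker, and docker commands work without sudo.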

General usage

One of the challenges in production as well as development environments is ensuring that exactly the same setup exists everywhere. The neat thing about Docker is that you can pull down specific tools by version. You simply provide a host and pull down your containers. If for any reason you need a different version, or multiple versions, you can run them side by side as containers on the same machine, even where installing them side by side directly would not be possible.
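As a sketch of running two versions side by side, assuming the official tomcat image and its 9.0 and 8.5 tags (tags change over time, so check the store for the ones currently offered), the hypothetical helper `run_version` below pulls one tagged version and starts it under its own container name and host port. Tomcat listens on port 8080 inside the container, so each version only needs a different host port.

```shell
# Hypothetical helper: pull one tagged version of an image and run it
# detached, named after image and tag, on its own host port.
# Works for single-word image names such as "tomcat"; a name containing
# "/" would not be a valid container name.
run_version() {
  local image="$1" tag="$2" host_port="$3" container_port="$4"
  docker pull "$image:$tag" || return 1
  docker run -d --name "$image-$tag" \
    -p "$host_port:$container_port" "$image:$tag"
}
```

For example, `run_version tomcat 9.0 8080 8080` followed by `run_version tomcat 8.5 8081 8080` would leave both versions running at the same time, one on host port 8080 and the other on 8081.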

Docker has many command options, some of which are used to monitor containers, but the most important one is the one that actually runs them. Simply use the run command and the container runs as a normal process. This isn't very different from running an installed program, except for the isolation that containerization adds.

Containers are kept isolated from the host and from each other, which means they cannot do very much unless you allow them to interact with the system. Docker's control over this is well done and very granular: you can allow a container to access a single file, a directory, or even a port.

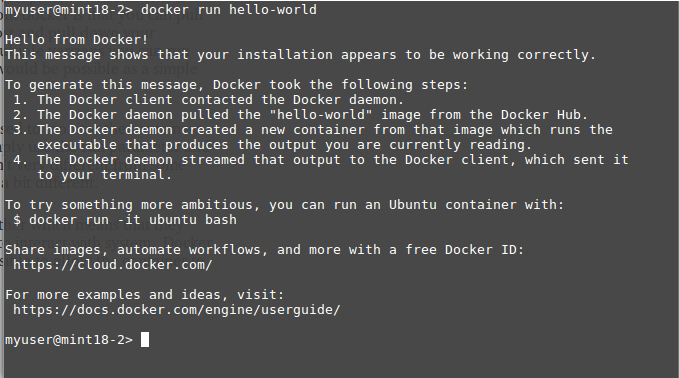

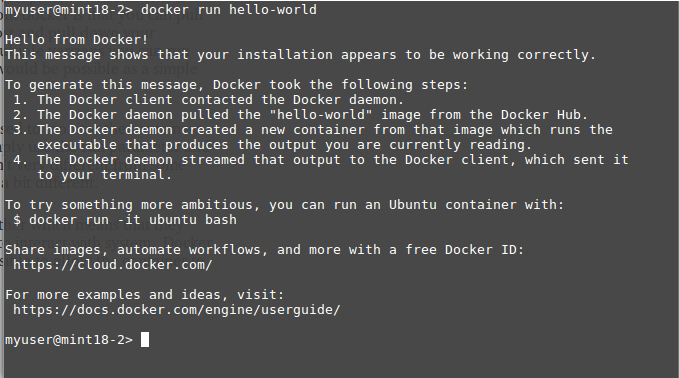

The simplest container needs no connection to the outside world, although such jobs are pretty rare. An example of this is the hello-world container provided by Docker.

This example neither saves anything to the file system nor reads any input from a port.
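Because hello-world needs nothing from the host, it also makes a handy sanity check, for instance after the docker group change described earlier. A minimal sketch, using a hypothetical helper name of my own, `docker_smoke_test`:

```shell
# Hypothetical helper: quick check that docker works for this user.
# Runs Docker's hello-world image (pulled automatically on first use);
# --rm deletes the container afterwards so it does not pile up in the
# list of stopped containers.
docker_smoke_test() {
  if docker run --rm hello-world; then
    echo "docker is working"
  else
    echo "docker run failed" >&2
    return 1
  fi
}
```

If this fails with a permission error on the daemon socket, the group membership has usually not taken effect yet in the current session.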

A more common use case is to map a directory or a configuration file from the host into a container. This is done by passing one or more mappings with the -v argument:

-v <host source directory>:<container dest directory>

This works for either a directory or a single file; the syntax is the same in both cases. A similar format, with the -p argument, maps a port on the host machine to a port in the container:

-p <host port>:<container port>
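To see -v and -p together, here is a sketch that serves a host directory through the official nginx image, which serves files from /usr/share/nginx/html on port 80. The helper name `serve_dir` and the default host port 8080 are my own illustrative choices; the optional `:ro` suffix makes the mapping read-only inside the container.

```shell
# Hypothetical helper: serve a host directory with the official nginx image.
#   -v host_path:container_path[:ro]  maps a directory (or a single file);
#                                     ":ro" makes it read-only in the container
#   -p host_port:container_port       publishes container port 80 on host_port
serve_dir() {
  local site_dir host_port="${2:-8080}"
  # -v expects an absolute host path (a bare name is treated as a named
  # volume), so resolve the argument first.
  site_dir="$(cd "$1" && pwd)" || return 1
  docker run -d --rm \
    -v "$site_dir:/usr/share/nginx/html:ro" \
    -p "$host_port:80" \
    nginx
}
```

For example, `serve_dir ./site 8080` should make the contents of ./site available at http://localhost:8080/. A single file is mapped with exactly the same syntax, for instance `-v "$PWD/default.conf:/etc/nginx/conf.d/default.conf:ro"` to replace nginx's default site configuration.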

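You can also pass environment variables into the container with the -e argument, either with an explicit value or, by giving only the name, with the value the variable already has on the host:

-e <variable>=<value>

-e <variable>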
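A small sketch showing both forms of -e. The variable name APP_MODE, the use of TZ, and the alpine image are illustrative assumptions; the single quotes keep the host shell from expanding the variables, so `echo` inside the container prints the values the container actually received.

```shell
# Hypothetical helper: show how -e passes variables into a container.
#   -e NAME=value   sets NAME to an explicit value inside the container
#   -e NAME         copies NAME's current value from the host environment
show_env() {
  docker run --rm \
    -e "APP_MODE=$1" \
    -e TZ \
    alpine sh -c 'echo "APP_MODE=$APP_MODE TZ=$TZ"'
}
```

With `export TZ=UTC` on the host, `show_env debug` should print `APP_MODE=debug TZ=UTC`. If TZ is not set on the host, the container simply does not receive it.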

Interactive container

In my opinion, one of the neatest uses of containers is to run a graphical program like Eclipse. However, because the container itself is not persistent, Eclipse in a container is not very useful unless its work is saved on the host.

In this case, a directory on the host is mapped to the container's workspace directory. Simply mapping the local directory into the container makes Eclipse behave exactly as if it were installed normally on the host machine.

Below is a small script that runs the container and maps a few directories and an environment variable into it. It is fairly similar to the one in the Docker store for this container.

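```shell
#!/bin/bash
cd ~cdock || exit 1

# Persist Eclipse's home directory (settings, plugins) on the host
mkdir -p "$PWD/.eclipse-docker"

# First IP address reported for this host
myip=$(hostname -I | awk '{ print $1 }')

# Allow X11 connections from this address only ("xhost +" on its own would allow everyone)
xhost +"$myip"

export DISPLAY="$myip:0"

docker run -d --rm -e DISPLAY="$DISPLAY" \
  -v /tmp/.X11-unix:/tmp/.X11-unix \
  -v "$PWD/.eclipse-docker:/home/developer" \
  -v /home/cdock/workspace:/home/developer/workspace \
  fgrehm/eclipse:v4.4.1
```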

It is not always obvious which host resources need to be mapped into a container. This particular container is just another example of why it is useful to read the notes in the store.

The Eclipse container is a great example of an interactive task, but not all containers have a GUI. You can also run a program interactively in a terminal, and that program could simply be bash:

```shell
docker run -i -t fgrehm/eclipse:v4.4.1 /bin/bash
```

Monitoring Docker

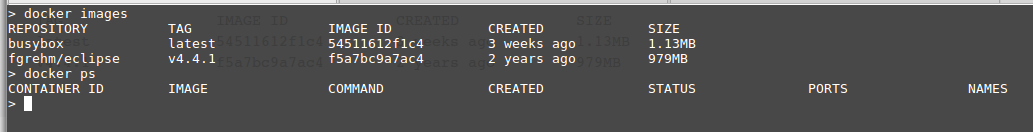

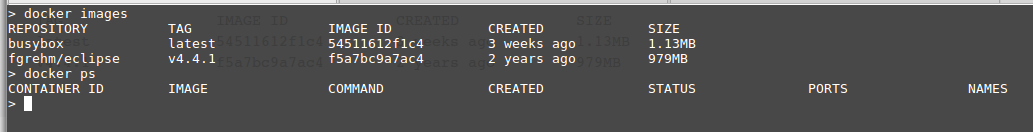

There are two different things to monitor. The first is which images have been downloaded to your computer.

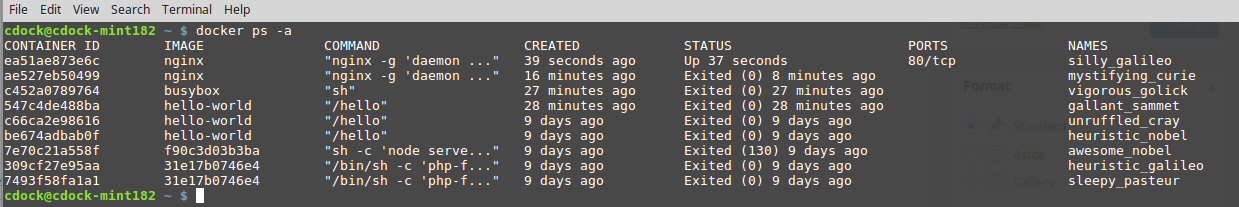

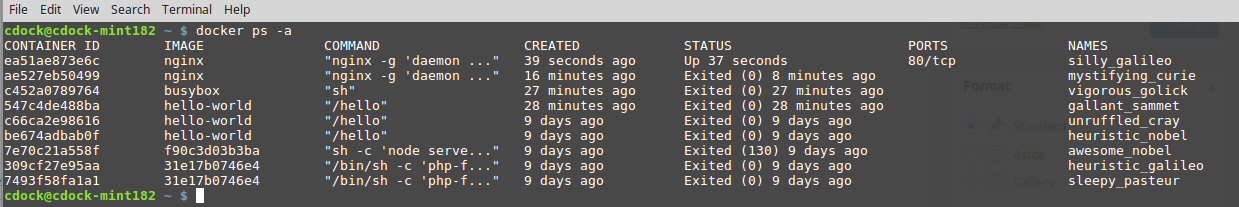
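Downloaded images are listed with `docker images` (also available as `docker image ls`). As a sketch, the hypothetical helper below uses the `--format` option with a Go template to print one compact line per image; the choice of fields is just an example.

```shell
# Hypothetical helper: list downloaded images as "repository:tag size".
list_images() {
  docker images --format '{{.Repository}}:{{.Tag}} {{.Size}}'
}
```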

The image list, however, does not show which containers are actually running. The “ps” command, much like the Linux ps command, shows which containers are running.

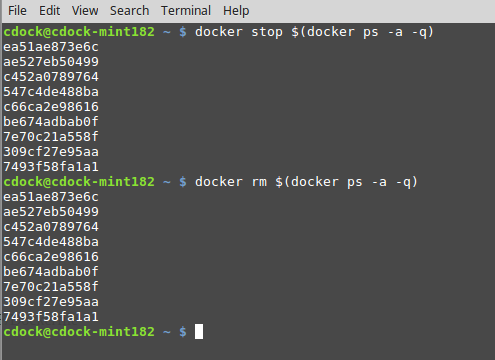

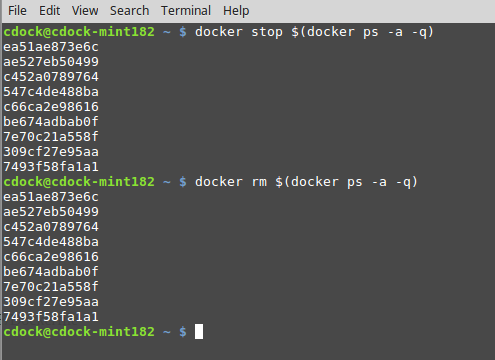
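The running containers can be listed the same way. A sketch with two hypothetical helpers: one trims `docker ps` down to ID, image and status, and one uses `-q` to print only the container IDs, which is what `docker stop` expects.

```shell
# Hypothetical helper: running containers as "ID image status".
list_running() {
  docker ps --format '{{.ID}} {{.Image}} {{.Status}}'
}

# Hypothetical helper: only the IDs of running containers (-q = quiet).
list_running_ids() {
  docker ps -q
}
```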

The running containers are probably daemons or other processes that run in the background. Stopping one is as simple as starting it: simply ask Docker to stop the container by its ID.

```shell
docker stop ae527eb50499
```

After a container is stopped, it no longer shows up in the list of running containers. However, if you ask the right way, you can see a list of the containers that have already stopped.

You can clean up this list by removing the stopped containers.
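The "right way" to ask is the `-a` (all) flag of ps, optionally filtered down to exited containers. A sketch with hypothetical helper names: `docker rm` removes a single stopped container, and `docker container prune` removes all stopped containers at once. Containers started with `--rm`, like the Eclipse script above, are removed automatically and never appear in this list.

```shell
# Hypothetical helper: list only stopped (exited) containers;
# -a includes containers that are not running.
list_stopped() {
  docker ps -a --filter status=exited
}

# Hypothetical helper: remove one stopped container by ID or name.
remove_container() {
  docker rm "$1"
}

# Hypothetical helper: remove every stopped container;
# -f skips the confirmation prompt.
remove_all_stopped() {
  docker container prune -f
}
```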

Even if you were limited to the pre-created containers in the Docker store, Docker would still be a very powerful tool, but it is also possible to create your own containers or to extend existing ones.

I will talk about that in my next article on Docker.